Introduction

Azure DevOps is basically Microsoft’s ‘GitHub’. It contains Git repositories, pipelines, package feeds (Azure Artifacts), a test framework, sprint/task boards and many more things. For many companies it is the de facto standard when it comes to DevOps-based work.

Lately I have been working a lot with the Git and Pipeline options within Azure DevOps, and I have come to the conclusion that a lot of the things we use are not easy to find in online courses. So I combined the options that I use in this blog post. I will update it periodically whenever I find out new things, so that it combines all my knowledge in this area. I will probably also address the newer items in a separate blog entry.

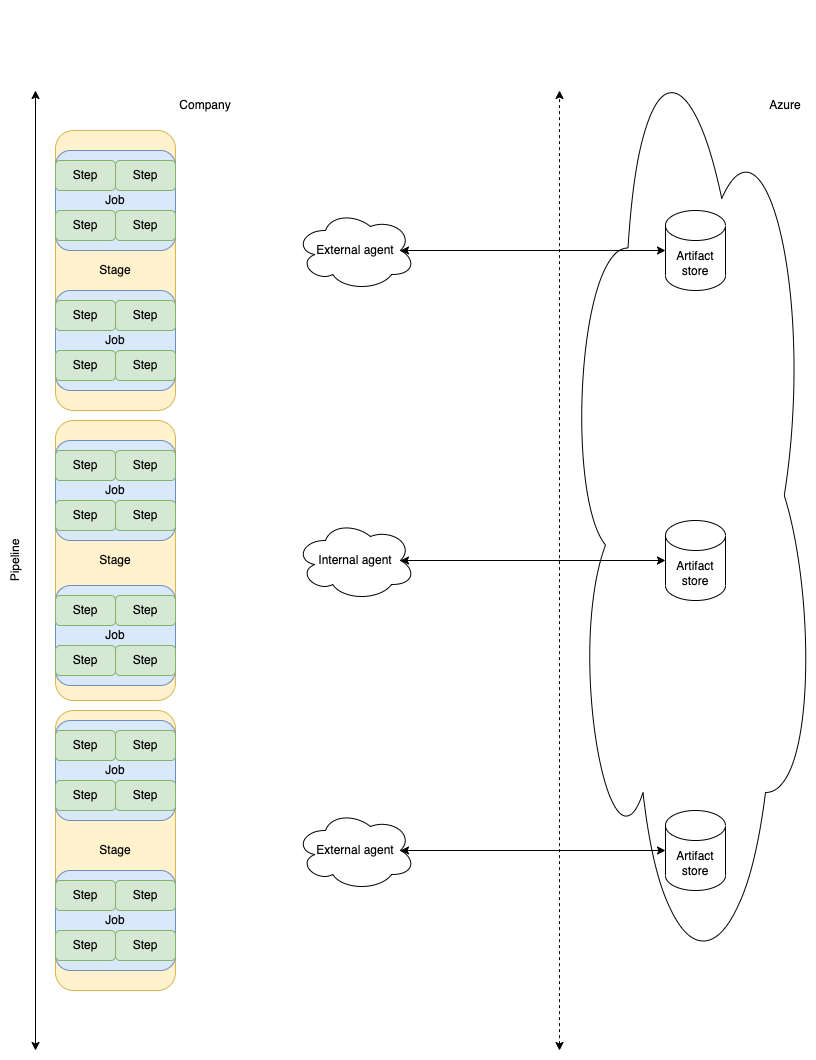

Architectural overview of the blogpost

To make clear what all the different bits and pieces of Azure mean and to make it more

visual, I created a little diagram:

The diagram was created with draw.io and reflects a few items that will be explained later. The yellow boxes are “stages”, which are filled with blue “job” boxes (a stage has one or more jobs). The green boxes inside the jobs are the “steps”: the lowest-level, but most important, parts of a job.

The diagram also shows the Azure cloud on the right-hand side, and the “Artifact” stores. Within the cloud they are one and the same store, but to avoid cluttering the drawing I drew three of them. Effectively they are all the same!

In addition, three agents of two different types are shown. Each job runs on its own agent, and different agents can have different policies applied to them. The drawing contains External Agents (EA) and Internal Agents (IA) to make clear why Artifacts can be helpful.
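To connect the diagram to actual YAML, here is a minimal sketch of the stage, job and step hierarchy. The pool names ‘External-Agents’ and ‘Internal-Agents’ are hypothetical placeholders for the EA and IA pools in the drawing. Replace them with the agent pools your organisation provides.

```yaml
# Minimal sketch of the stage -> job -> step hierarchy from the diagram.
# Pool names are hypothetical placeholders for the EA and IA agent pools.
stages:
- stage: PreActions              # yellow box
  jobs:
  - job: Prepare                 # blue box
    pool:
      name: External-Agents      # hypothetical EA pool
    steps:                       # green boxes
    - script: echo "step 1 runs on an external agent"
    - script: echo "step 2 runs on the same agent"

- stage: Actions
  dependsOn: PreActions
  jobs:
  - job: Execute
    pool:
      name: Internal-Agents      # hypothetical IA pool
    steps:
    - script: echo "this job gets its own (internal) agent"
```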

Of course, all this information can be found on the internet. One of my main sources of information for Azure DevOps is Microsoft itself, and there are many useful external references as well. If you have comments or want to discuss something with me, see the contact menu item at the top on how to reach me. Do note that not every option of Azure DevOps is covered here. There are far too many and I simply don’t use everything, but I do use a lot of the options available.

Pipelines

What are pipelines actually? I always visualize a pipeline as a factory. Something goes in (resources), is processed at various levels, and something comes out (a product). A car factory is a good example. Metals and other required resources go in and the frame gets built. Doors, tires, electronics, etc. are added and optimized according to the order, and the car is painted according to the customer’s demands. In the end the car is tested and delivered to the customer.

All of this is largely done automatically inside the factory. If you think of that as ‘a pipeline’, you understand what a pipeline does.

If you are an old-school Unix guy like I am, you can also call it an advanced job scheduler. In practice the pipeline orchestrates and enriches the jobs that need to run to form the end result. Runs can be schedule-based, or triggered by a commit (check-in) to a repository, a pull/merge request, etc.

Explanation about pipelines within Azure

Every vendor’s pipeline implementation differs in some bits and pieces. If you keep the above example in mind, you can most likely map the concepts onto the product you are using, even if some options are named or used differently. Amongst others, I have used GitLab CI/CD to automate the delivery of these webpages. At my previous employer I used Jenkins to generate periodic reports, and in the past I also used it to deliver these webpages. At work we use Azure to deliver CI/CD capabilities. All three follow the same methodology with different implementations. Real-life practice makes a difference, so if you are familiar with one product but not yet with Azure, get some hands-on experience with it.

Stages, jobs, steps

What are stages

You can see a stage as a set of “jobs” that form a coherent action. Take an upgrade process as an example. You will probably first make a backup, then perform the actions and update your configuration database, and finally clean up the backup (if everything succeeded).

If you made a high-level drawing of that, you would likely separate it into three phases: pre-actions, actions and post-actions. Each of those phases is a stage.

A stage can depend on another stage. Imagine you need to prepare an image in one stage and later use that image to continue your deployment. The following:

```yaml
stages:
- stage: "Name_earlier_stage"
  # [....]

- stage: "NameStage"
  dependsOn: "Name_earlier_stage"
```

will make your second stage dependent on your first stage.
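The upgrade example from the previous section could be sketched as three chained stages. The stage and job names are illustrative, and the ‘echo’ commands stand in for the real work. Without ‘dependsOn’, stages already run one after the other in the order they are defined. An explicit ‘dependsOn’ is useful to make the ordering visible, to fan in from several stages, or (with ‘dependsOn: []’) to let a stage run in parallel.

```yaml
stages:
- stage: pre_actions
  jobs:
  - job: backup
    steps:
    - script: echo "make and store a backup"

- stage: actions
  dependsOn: pre_actions
  jobs:
  - job: upgrade
    steps:
    - script: echo "perform the upgrade"

- stage: post_actions
  dependsOn: actions
  # only clean up when the upgrade succeeded (this is also the default)
  condition: succeeded()
  jobs:
  - job: cleanup
    steps:
    - script: echo "clean up the backup"
```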

What are jobs

As you read in the previous part about stages, a stage is divided into a set of jobs. A job is itself a set of steps that form a logical entity. In the above example, the pre-actions stage would consist of a number of jobs, and each of those jobs does a couple of things (steps) before it finishes. In Unix you would likely call a job a script that has several functions or actions taking place inside it.

The pre-actions could, for example, be split into the following smaller jobs:

- do validation tests

- make backup

- store backup

- update configuration database

- set machine into maintenance (for alerting etc)

- notify monitoring department or person on-call

- etc.

Each of these jobs has several actions taking place inside it. We will get to those in the next section.
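Jobs inside a stage run in parallel by default (as far as agents are available), unless you chain them with ‘dependsOn’. Below is a sketch of part of the pre-actions stage. The job names are taken from the list above, and ‘echo’ stands in for the real work.

```yaml
stages:
- stage: pre_actions
  jobs:
  - job: validation_tests
    steps:
    - script: echo "run validation tests"

  - job: make_backup
    dependsOn: validation_tests      # waits until the tests have finished
    steps:
    - script: echo "make backup"

  - job: notify_on_call
    # no dependsOn: runs in parallel with validation_tests
    steps:
    - script: echo "notify monitoring / on-call"
```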

What are steps

Steps are the lowest level of an Azure Pipeline, and although they sit at the bottom, they are certainly among the most important parts. This is where the actual work happens. Stages and jobs group and combine the logical actions, but they do not perform actions themselves; the steps actually do something.

Imagine the above example and zoom in on the ‘make backup’ job. That job could have several steps:

- Prepare environment

- fetch Key Vault secrets

- install required dependencies

- download company internal applications

- Log in to the current machine to obtain a token

- Use token to call backup API

- Download backup into staging directory

- Upload artifact into the artifact store (either pipeline artifact, or Azure artifact)

All steps run on the agent that runs the job, but each step can execute a different task or script. For example, calling the backup API could be done with a PowerShell script that is used internally. Fetching the Key Vault secrets, on the other hand, could be done with a task provided by Azure or one that is shared company-wide.
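Here is a trimmed-down sketch of the ‘make backup’ job. The service connection, vault name, secret name (‘backup-api-token’) and API URL are all hypothetical, and the script assumes a Linux agent. The AzureKeyVault task exposes the fetched secrets as pipeline variables. Secrets have to be mapped into a script explicitly via ‘env’.

```yaml
jobs:
- job: make_backup
  steps:
  # Fetch secrets from Key Vault; each secret becomes a pipeline variable
  - task: AzureKeyVault@2
    inputs:
      azureSubscription: 'your-service-connection'   # hypothetical
      KeyVaultName: 'your-keyvault'                  # hypothetical
      SecretsFilter: 'backup-api-token'              # hypothetical secret name

  # Call the (hypothetical) backup API; secrets are not in env unless mapped
  - script: |
      mkdir -p "$(Build.ArtifactStagingDirectory)/backup"
      curl --fail -H "Authorization: Bearer $BACKUP_TOKEN" \
        -o "$(Build.ArtifactStagingDirectory)/backup/backup.tar.gz" \
        https://backup.example.internal/api/backup
    displayName: Call backup API
    env:
      BACKUP_TOKEN: $(backup-api-token)

  # Upload the result as a pipeline artifact
  - publish: $(Build.ArtifactStagingDirectory)/backup
    artifact: backup
```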

The difference between tasks and scripts

The difference is quite simple: a task is basically a wrapper around a script. The PowerShell@2 task, for example, takes several inputs that abstract away parameters you would otherwise have to handle yourself. A common one is ‘workingDirectory’, which specifies the directory in which the script is executed. The task also lets you either run an inline script (the script body is then passed as an input of the task) or reference an external script file.

A script does not have that wrapper around it, so you can customize it more and do whatever you need, but you have to do everything yourself. I find myself using a combination of both, but for Azure Key Vault it is very convenient to use the AzureKeyVault task.
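To make the difference concrete, here is the same illustrative inline PowerShell written two ways: once as a PowerShell@2 task, and once as a plain ‘pwsh’ script step. The ‘webroot’ directory is a hypothetical example. ‘targetType’, ‘script’, ‘workingDirectory’ and ‘pwsh’ are inputs of the task.

```yaml
steps:
# As a task: the inputs abstract away how the script is invoked
- task: PowerShell@2
  displayName: Inline script via task
  inputs:
    targetType: inline                 # or 'filePath' together with filePath:
    script: Get-ChildItem
    workingDirectory: $(Build.SourcesDirectory)/webroot
    pwsh: true                         # use PowerShell Core

# As a plain script step: you handle everything yourself
- pwsh: |
    Set-Location "$(Build.SourcesDirectory)/webroot"
    Get-ChildItem
  displayName: Inline script as plain step
```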

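What are the @digits in task names?

The @0, @1, @2, etc. are the major versions of the task. This way a task can evolve over time with new options (including breaking changes) in a new major version. Existing pipelines keep working because they reference the version they were written for. Minor updates within a major version are picked up automatically.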

Practical examples within the pipeline

Logical operators

Logical operators are found in most programming languages. They allow you to express English-like decisions: ‘if something is true, then do action A, else if something else is true, do action B, and if nothing is true, well, then do action Z’.

In most languages that is quite simple. In Python, for example, you would write ‘if value in string:’.

In Azure I find it a bit harder, because you ‘wrap’ a condition in functions such as ‘eq’, ‘ne’, ‘contains’, ‘containsValue’, ‘and’, ‘or’ and many more. Azure calls these “functions”.

The expression below combines two checks that both need to be true. The first check tests whether parameters.key equals ‘yourValue’. The second tests whether parameters['key2'] contains the string ‘yourOtherValue’. If both are true, the whole expression evaluates to true and whatever you combine it with is performed.

```
and(eq(parameters.key, 'yourValue'), contains(parameters['key2'], 'yourOtherValue'))
```

I find this harder to read, especially when the ‘and’/‘or’ functions take more than two arguments. It can become unreadable quite quickly. Write out what you want to achieve first, so that you have it ‘drawn’ or ‘designed’ before you put it into an expression.
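The same functions can be used in a ‘condition:’ on a stage, job or step, which is evaluated at runtime. Here is a sketch of two illustrative steps. The first only runs for manually started runs of ‘main’; the second only runs for release or hotfix branches. ‘and()’ accepts more than two arguments. Keep ‘succeeded()’ in a custom condition, otherwise the step also runs after an earlier failure.

```yaml
steps:
- script: echo "deploying from main"
  displayName: Deploy (manual runs of main only)
  # and() takes two or more arguments; all must be true
  condition: and(succeeded(), eq(variables['Build.SourceBranchName'], 'main'), eq(variables['Build.Reason'], 'Manual'))

- script: echo "release or hotfix branch"
  # or() nested inside and(): at least one branch check must be true
  condition: and(succeeded(), or(startsWith(variables['Build.SourceBranch'], 'refs/heads/release/'), contains(variables['Build.SourceBranch'], 'hotfix')))
```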

If then else example

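Within Azure you can of course also use if/then/else, but pay close attention to the indentation. Task settings go under ‘inputs:’, and the parameter is referenced as parameters.test (or parameters['test']):

```yaml
steps:
- task: AzureKeyVault@2
  inputs:
    azureSubscription: 'your-service-connection'  # placeholder service connection
    ${{ if eq(parameters.test, 'true') }}:
      KeyVaultName: 'test'
    ${{ else }}:
      KeyVaultName: 'something-else'
```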
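The ‘action A, action B, otherwise action Z’ decision from the start of this section maps onto ‘${{ if }}’, ‘${{ elseif }}’ and ‘${{ else }}’. Below is a sketch that assumes a hypothetical string parameter ‘environment’.

```yaml
parameters:
- name: environment            # hypothetical parameter
  type: string
  default: test
  values:
  - test
  - acceptance
  - production

steps:
- ${{ if eq(parameters.environment, 'production') }}:
  - script: echo "action A: production"
- ${{ elseif eq(parameters.environment, 'acceptance') }}:
  - script: echo "action B: acceptance"
- ${{ else }}:
  - script: echo "action Z: everything else"
```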

If value contains

Using the same if construct, you can also check whether a piece of text is included in a parameter. This can be useful if you have a naming convention or unique indicator that you can use to set certain inputs.

```yaml
steps:
- task: AzureKeyVault@2
  inputs:
    azureSubscription: 'your-service-connection'  # placeholder service connection
    ${{ if contains(parameters.test, 'your_name') }}:
      KeyVaultName: 'set-to-what-you-need'      # placeholder value
```

Loop through a list

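With ‘each’ you can loop through a list. The parameter needs to be of type ‘object’ to hold a list; a ‘string’ parameter with ‘values’ only lets you pick a single value from it.

```yaml
parameters:
- name: loop_list
  type: object
  displayName: A nice name for the loop_list
  default:
  - a
  - b
  - c

jobs:
- job: loop_example
  steps:
  - ${{ each value in parameters.loop_list }}:
    - task: AzureKeyVault@2
      inputs:
        azureSubscription: 'your-service-connection'  # placeholder
        KeyVaultName: ${{ value }}
```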
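‘each’ is not limited to steps; it can also generate complete jobs (or stages). Below is a sketch that creates one job per entry of a hypothetical ‘environments’ list. Template expressions are allowed in the job name.

```yaml
parameters:
- name: environments          # hypothetical list parameter
  type: object
  default:
  - test
  - acceptance

jobs:
- ${{ each env in parameters.environments }}:
  - job: deploy_${{ env }}
    displayName: Deploy to ${{ env }}
    steps:
    - script: echo "deploying to ${{ env }}"
```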

Templating

Of course, you will probably notice that the work you need to do overlaps between various pipelines. If you always need to fetch your Azure Key Vault secrets with certain custom settings, you would have to do that in every pipeline again and again… right?

Like in regular coding, you can ‘template’ these things. In Azure terminology, a template is a file containing a repeatable piece of pipeline that you can include in your pipeline. It can come from the local repository or from a remote ‘resource’ repository.

This saves a lot of time and maintenance if you work for a bigger organisation, or have sections that you need to re-use between pipelines. A bugfix or new feature is propagated to every pipeline that uses the template and takes effect the next time that pipeline runs. Of course, on the negative side, a bug is also propagated to all pipelines using the code, so test it well!

Internal resource

By an internal resource I mean a template that lives within the current scope/repository, like:

```yaml
jobs:
- template: localpath/to/template.yml
```
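Templates become more useful once they take parameters. Below is a sketch of a local job template and the pipeline that uses it. The file name ‘templates/fetch-secrets.yml’ and its parameters are hypothetical.

```yaml
# templates/fetch-secrets.yml (hypothetical local template)
parameters:
- name: vaultName
  type: string
- name: serviceConnection
  type: string

jobs:
- job: fetch_secrets
  steps:
  - task: AzureKeyVault@2
    inputs:
      azureSubscription: ${{ parameters.serviceConnection }}
      KeyVaultName: ${{ parameters.vaultName }}
      SecretsFilter: '*'
```

```yaml
# azure-pipelines.yml: include the template and pass its parameters
jobs:
- template: templates/fetch-secrets.yml
  parameters:
    vaultName: 'your-keyvault'                    # hypothetical
    serviceConnection: 'your-service-connection'  # hypothetical
```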

External resources

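One of the options to include a file from another repository is a “resource”. This goes as follows (for an Azure Repos Git repository, ‘name’ takes the form project/repository):

```yaml
resources:
  repositories:
  - repository: myrepo
    type: git
    name: yourproject/myrepo
```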

Later in the pipeline you can reference this by:

```yaml
jobs:
- template: path/to/template.yml@myrepo
```

where myrepo is the name given at ‘- repository: myrepo’. You can of course include more repositories and reference them accordingly.
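A change in the template repository immediately affects every pipeline that uses it (see the warning above). You may therefore want to pin the resource to a tag or branch with ‘ref’. Here is a sketch with a hypothetical tag name.

```yaml
resources:
  repositories:
  - repository: myrepo
    type: git
    name: yourproject/myrepo
    ref: refs/tags/v1.0.0        # hypothetical tag to pin the templates to

jobs:
- template: path/to/template.yml@myrepo
```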

Scheduling and/or triggers

Scheduling and triggers define when a pipeline gets executed if not done manually.

If you want to schedule runs in a cron-like way, use:

```yaml
schedules:
- cron: "59 23 * * *"
  displayName: Just before midnight
  branches:
    include:
    - yourbranch
  always: true
```

With this cron definition the pipeline runs one minute before midnight. Azure evaluates these cron schedules in UTC, so adjust the hour for your own time zone. It will only run for ‘yourbranch’ in this case. ‘always: true’ makes it run even when there were no code changes since the last scheduled run.

If you do not want scheduled runs, remove the ‘schedules:’ section. To disable commit-based (CI) execution of your pipeline, set:

```yaml
trigger: none
```
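For commit-based triggering, the ‘trigger’ section takes branch and path filters. The ‘pr’ section controls pull request runs for GitHub and Bitbucket repositories. For Azure Repos, pull request validation is configured through branch policies instead. Here is a sketch with hypothetical branch and path names.

```yaml
trigger:
  branches:
    include:
    - main
    - release/*
  paths:
    exclude:
    - docs/*          # hypothetical: changes under docs/ do not start a run

pr: none              # no pull request runs (GitHub/Bitbucket repositories)
```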

Variables and Parameters

Variables are one of the dynamic features that most CI/CD tools offer. Azure uses them too, and extends the concept with parameters.

What are variables

Variables are simple name/value pairs that you can re-use throughout the entire pipeline. You can change them, or export them as the output of a task or job so that a different job can use them as well. That other job runs on its ‘own’ agent, potentially on a very different machine that is unaware of the original job/agent.

For example:

```yaml
variables:
- name: my_project_name
  value: "www.evilcoder.org"
```

You can use this throughout the pipeline with $(my_project_name), which expands to ‘www.evilcoder.org’ in this example.

The $() notation will look familiar from scripting languages like ‘shell’, but there are other ways to reference a variable as well. Azure calls $() the macro syntax. It is processed at runtime, just before a task executes, so the variable has to have a value by then (task level).

Azure also supports so-called template expressions. These are processed when the pipeline is compiled and cannot be changed afterwards. An example is ${{ variables.my_project_name }}, which has a different syntax but still prints ‘www.evilcoder.org’ in this example.

Another way to reference variables is with runtime expressions, which are also processed at runtime. They are written as $[variables.my_project_name] in this case. You will see them in conditions and variable definitions, for example when comparing values or checking whether a variable contains a certain string.
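Here is a small sketch that uses the same variable with all three syntaxes. A runtime expression has to be the complete right-hand side of a variable definition. That is why it is assigned to a helper variable first (‘copied_name’, hypothetical).

```yaml
variables:
- name: my_project_name
  value: "www.evilcoder.org"
# runtime expression: must be the whole right-hand side of a definition
- name: copied_name
  value: $[variables.my_project_name]

steps:
# template expression: replaced when the pipeline is compiled
- script: echo "${{ variables.my_project_name }}"
# macro syntax: replaced just before this task runs
- script: echo "$(my_project_name)"
# value produced by the runtime expression above
- script: echo "$(copied_name)"
```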

What are parameters

In a nutshell, parameters are more sophisticated variables. You can specify the type of the parameter and provide a list of allowed values (if the list is long enough you get a dropdown), etc. When you run a pipeline manually, you get a popup asking for the values of the parameters. Unless a parameter has a default, you need to fill it in or select a value from the menu.

Parameters are expanded just before the pipeline runs (at compile time) and are therefore static. If you need more dynamic items, variables are much more flexible. However, I prefer parameters because they give me more control over the type.
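Here is a sketch of what that extra control over types looks like. It assumes two hypothetical parameters: a ‘boolean’, shown as a checkbox when you run the pipeline manually, and a ‘number’.

```yaml
parameters:
- name: runTests          # hypothetical
  displayName: Run the test suite
  type: boolean
  default: true
- name: retries           # hypothetical
  type: number
  default: 3

steps:
# the step only exists in the compiled pipeline when runTests is true
- ${{ if eq(parameters.runTests, true) }}:
  - script: echo "running tests with ${{ parameters.retries }} retries"
```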

An example parameter:

```yaml
parameters:
- name: Webname
  displayName: Base URL of the webservice
  type: string
  default: "https://www.evilcoder.org"   # the default must be one of the values below
  values:
  - "https://www.remkolodder.nl"
  - "https://www.evilcoder.org"
  - "https://www.elvandar.org"
```

This gives you a selection list for the Webname parameter, which you can use later on in a step like this:

```yaml
- script: |
    hugo --baseURL ${{ parameters.Webname }}
```

This sets the base URL of a Hugo site to the selected site. I used the long form of the Hugo argument to keep it readable.

Difference between variables and parameters

As mentioned above, a variable can be changed on the fly when needed, or exported in a job to be re-used in a subsequent job. Parameters are more static and are expanded just before the pipeline runs. That makes them immutable during the run. In return, you have more control over what kind of parameter you expect, and you can even limit the input it accepts (see the values above).
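Here is a sketch of the ‘export’ mechanism. A step sets an output variable with the ‘task.setvariable’ logging command, and a later job in the same stage reads it through ‘dependencies’. Across stages, ‘stageDependencies’ is used instead. The job, step and variable names are hypothetical.

```yaml
jobs:
- job: producer
  steps:
  # isOutput=true makes the variable visible outside this job
  - script: echo "##vso[task.setvariable variable=imageTag;isOutput=true]1.2.3"
    name: setTag                  # a step name is needed to reference the output

- job: consumer
  dependsOn: producer
  variables:
    # dependencies.<job>.outputs['<step>.<variable>'], resolved at runtime
    imageTag: $[ dependencies.producer.outputs['setTag.imageTag'] ]
  steps:
  - script: echo "using image tag $(imageTag)"
```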

Simultaneous execution of something

You can run several copies of a job in parallel using the “matrix” strategy.

The matrix takes the keys at its top level (name_1 and name_2 below) and creates one copy of the job per key. The key/value pairs underneath each key become variables that you can use in your tasks or scripts.

```yaml
parameters:
- name: matrix_you_will_be_using
  type: object
  default:
    name_1:
      variable_a: "stringA"
      variable_b: "stringB"
    name_2:
      variable_a: "stringAA"
      variable_b: "stringBB"
    # [...]

jobs:
- job: matrix_example
  strategy:
    matrix: ${{ parameters.matrix_you_will_be_using }}
  steps:
  - script: |
      echo $(variable_a)
      echo $(variable_b)
```

This runs the job twice, once for name_1 and once for name_2. The name_1 copy prints stringA and stringB; the name_2 copy prints stringAA and stringBB. The copies can run concurrently (given enough agents). If you need to run the same logic many times with different input values, you can do that en masse. You can limit the number of concurrently running copies with ‘maxParallel’.

Inside the matrix job you reference the variables by their short name, e.g. $(variable_a). Compare that with looping through the same object with “each”, where every item is a key/value pair:

```yaml
steps:
- ${{ each ShortName in parameters.matrix_you_will_be_using }}:
  - script: |
      echo ${{ ShortName.key }}
      echo ${{ ShortName.value.variable_a }}
      echo ${{ ShortName.value.variable_b }}
```

This looks quite different from the strategy above. Unlike the matrix, it runs the steps one after the other within a single job.
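Here is a sketch of the matrix with ‘maxParallel’, defined inline instead of through a parameter. The job and entry names are illustrative. With ‘maxParallel: 1’ the two copies run one after another, even if more agents are available.

```yaml
jobs:
- job: matrix_example
  strategy:
    maxParallel: 1           # at most one matrix copy at a time
    matrix:
      name_1:
        variable_a: "stringA"
        variable_b: "stringB"
      name_2:
        variable_a: "stringAA"
        variable_b: "stringBB"
  steps:
  - script: |
      echo $(variable_a)
      echo $(variable_b)
```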

Artifacts

As you can see in my example image at the top, there are a couple of Artifact stores and some airgap lines. The airgap lines indicate that you cannot access data from one stage in another. With the difference between external and internal agents, you could be limited by internal policies as well. Imagine that you cannot fetch anything from the internet on the Internal Agents (IA); then you always need to fetch resources via the External Agents (EA). You can see the separation between them as an airgap.

If you allow your agents to access the Azure cloud, though, you can use the Azure Artifacts store as an airgap proxy. You can see it as a huge store for ‘artifacts’, or, as I think of them, ‘zip’ files. You upload a resource from the EA and download it later on the IA. In this example you could create a pre-actions report and upload it via Azure Artifacts. In the next stage you download it on the IA to do something with that report.
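The airgap pattern from the diagram could be sketched as two stages on different pools that hand a file over through an artifact. This sketch uses the ‘publish’ and ‘download’ shortcut steps for pipeline artifacts. The pool names, the report file and the URL are hypothetical. For a hand-over that has to outlive the run, the Azure Artifacts variant described below applies.

```yaml
stages:
- stage: external
  pool:
    name: External-Agents            # hypothetical EA pool (internet access)
  jobs:
  - job: pre_actions_report
    steps:
    - script: curl --fail -o "$(Build.ArtifactStagingDirectory)/report.json" https://example.com/report.json
    - publish: $(Build.ArtifactStagingDirectory)
      artifact: pre-actions-report

- stage: internal
  dependsOn: external
  pool:
    name: Internal-Agents            # hypothetical IA pool (no internet access)
  jobs:
  - job: use_report
    steps:
    - download: current              # artifacts of this pipeline run
      artifact: pre-actions-report
    - script: cat "$(Pipeline.Workspace)/pre-actions-report/report.json"
```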

Artifact Types

There are two types of artifacts that I am currently familiar with: Pipeline Artifacts and Azure Artifacts.

Pipeline artifact

This type of artifact only lives as long as the pipeline run it belongs to. Pipeline artifacts are not billed, and artifacts generated during a run are kept until the results of that run are deleted. If your pipeline retention period is 10 days, the artifacts of a run are removed after 10 days as well.

Usage is quite simple. An important variable within Azure DevOps is $(Build.ArtifactStagingDirectory). It points to the staging directory where ‘objects’ are stored temporarily before being put into an artifact, or whatever else you are going to do with them. Normally the relevant files are copied there using a ‘CopyFiles’ task and then published as an artifact. Note that ‘targetPath’ takes a file or directory, not a wildcard pattern:

```yaml
- task: PublishPipelineArtifact@1
  displayName: 'Publish'
  inputs:
    targetPath: $(Build.ArtifactStagingDirectory)/webroot
    ${{ if eq(variables['Build.SourceBranchName'], 'main') }}:
      artifactName: 'webroot-prod'
    ${{ else }}:
      artifactName: 'webroot-test'
    artifactType: 'pipeline'
```

Afterwards, in subsequent stages or jobs, you can download this webroot-prod or webroot-test artifact (that is the name of the artifact):

```yaml
- task: DownloadPipelineArtifact@2
  inputs:
    artifactName: 'webroot-test'
    targetPath: $(Build.SourcesDirectory)/webroot
```

This fetches the webroot-test artifact that was published earlier in the same pipeline run. You can download artifacts from other runs, branches or pipelines as well; see the reference at Microsoft.
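The ‘CopyFiles’ step that normally precedes the publish task could look like this. The ‘public’ source folder (Hugo’s default output directory) is an assumption for this example.

```yaml
- task: CopyFiles@2
  displayName: Copy site into the staging directory
  inputs:
    SourceFolder: '$(Build.SourcesDirectory)/public'     # assumed Hugo output folder
    Contents: '**'                                       # copy everything below it
    TargetFolder: '$(Build.ArtifactStagingDirectory)/webroot'
    CleanTargetFolder: true                              # start from an empty folder
```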

Azure Artifacts

I use this kind of artifact a lot. They are billed, though, so consider your storage needs and budget before using them.

Universal Packages in Azure Artifacts are identified by semantic version numbers (major.minor.patch), which the task can increment for you. You can also set a specific version yourself. That is handy if you ‘git tag’ your code to mark a release and want to create an artifact with the same version. Unlike the ‘PipelineArtifact’ tasks, whose names describe the task quite well, Azure Artifacts use the UniversalPackages task. By default, everything under $(Build.ArtifactStagingDirectory) is uploaded to the Azure Artifacts store. If you use the GUI to generate the task, you can select the feed etc., but these are converted into UUIDs in the YAML. That can be quite hard to read, so I suggest replacing them with your actual feed and package names.

Here is an example of how to create an Azure Artifact with your own version (assuming the version is in the $(git-tag-version) variable):

```yaml
- task: UniversalPackages@0
  displayName: Create latest webroot artifact
  inputs:
    command: publish
    publishDirectory: '$(Build.ArtifactStagingDirectory)'
    vstsFeedPublish: 'Yourproject/your-generated-feed'
    vstsFeedPackagePublish: 'webroot'
    versionOption: custom
    versionPublish: '$(git-tag-version)'
    packagePublishDescription: 'Webroot for website'
```

Later on you can download it in a different job and/or stage. If you need the version generated inside the same pipeline, make sure that job or stage depends on the publishing one. Otherwise you might download a different version, or the version you want may not exist yet. To download the latest version, specify ‘*’; otherwise specify the exact version number.

```yaml
- task: UniversalPackages@0
  displayName: 'Download webroot version'
  inputs:
    command: download
    vstsFeed: 'Yourproject/your-generated-feed'
    vstsFeedPackage: 'webroot'
    vstsPackageVersion: '$(git-tag-version)'
    downloadDirectory: '$(Build.SourcesDirectory)/webroot'
```
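Here is a sketch of that dependency. The download stage depends on the stage that publishes the package, and ‘*’ fetches the latest version in the feed. The stage and job names are hypothetical; the feed and package names are the placeholders used above.

```yaml
stages:
- stage: publish_webroot
  jobs:
  - job: publish
    steps:
    - script: echo "build and publish the webroot package here"

- stage: deploy_webroot
  dependsOn: publish_webroot        # make sure the new version exists first
  jobs:
  - job: download
    steps:
    - task: UniversalPackages@0
      displayName: 'Download latest webroot version'
      inputs:
        command: download
        vstsFeed: 'Yourproject/your-generated-feed'
        vstsFeedPackage: 'webroot'
        vstsPackageVersion: '*'      # latest version in the feed
        downloadDirectory: '$(Build.SourcesDirectory)/webroot'
```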